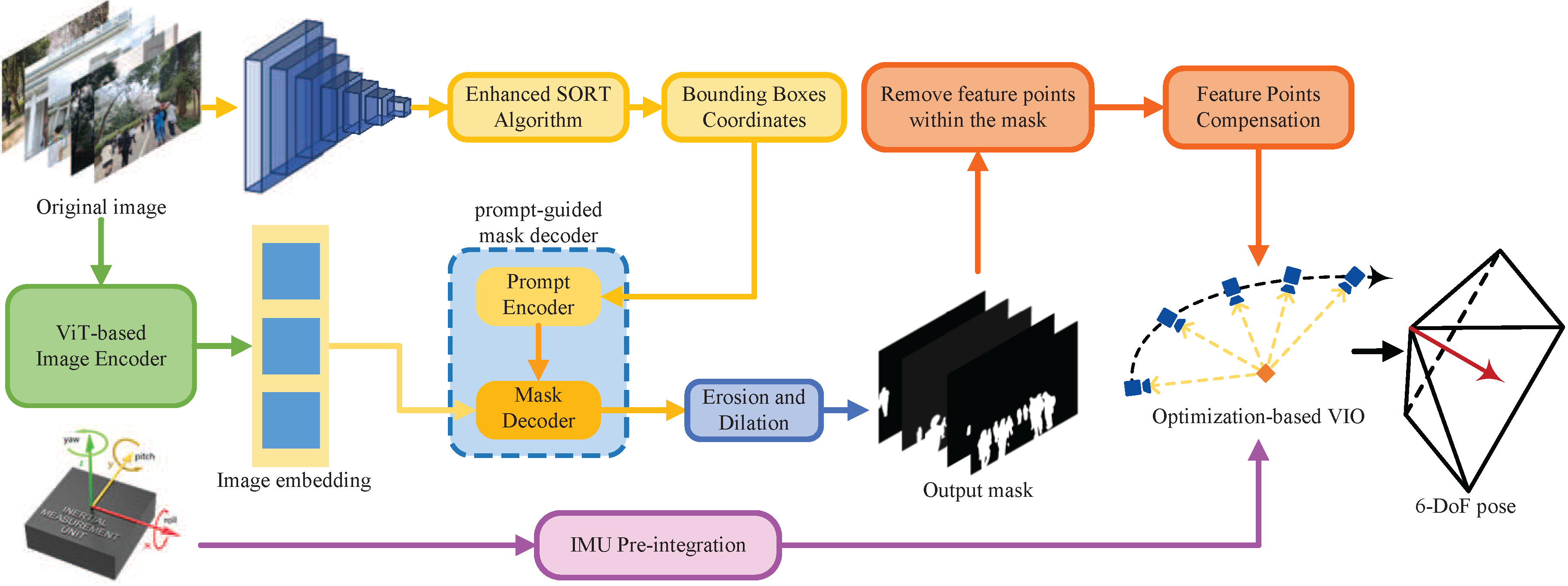

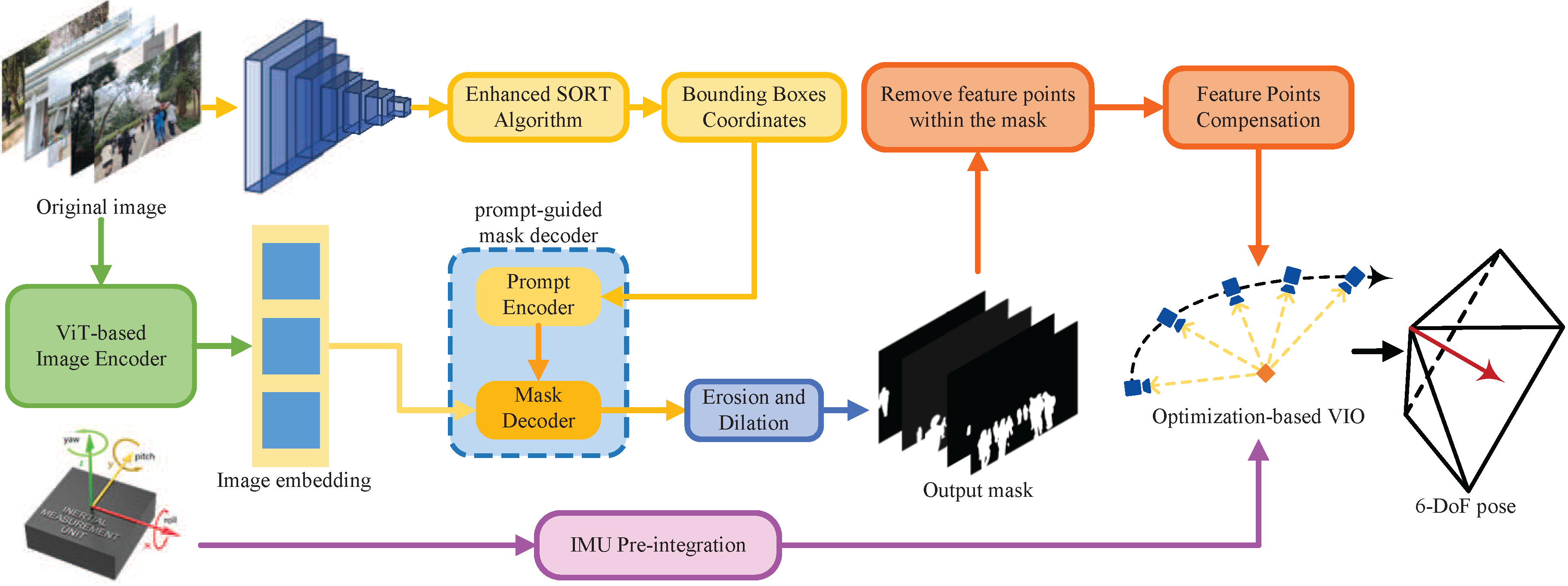

Overview of ADUGS-VINS.

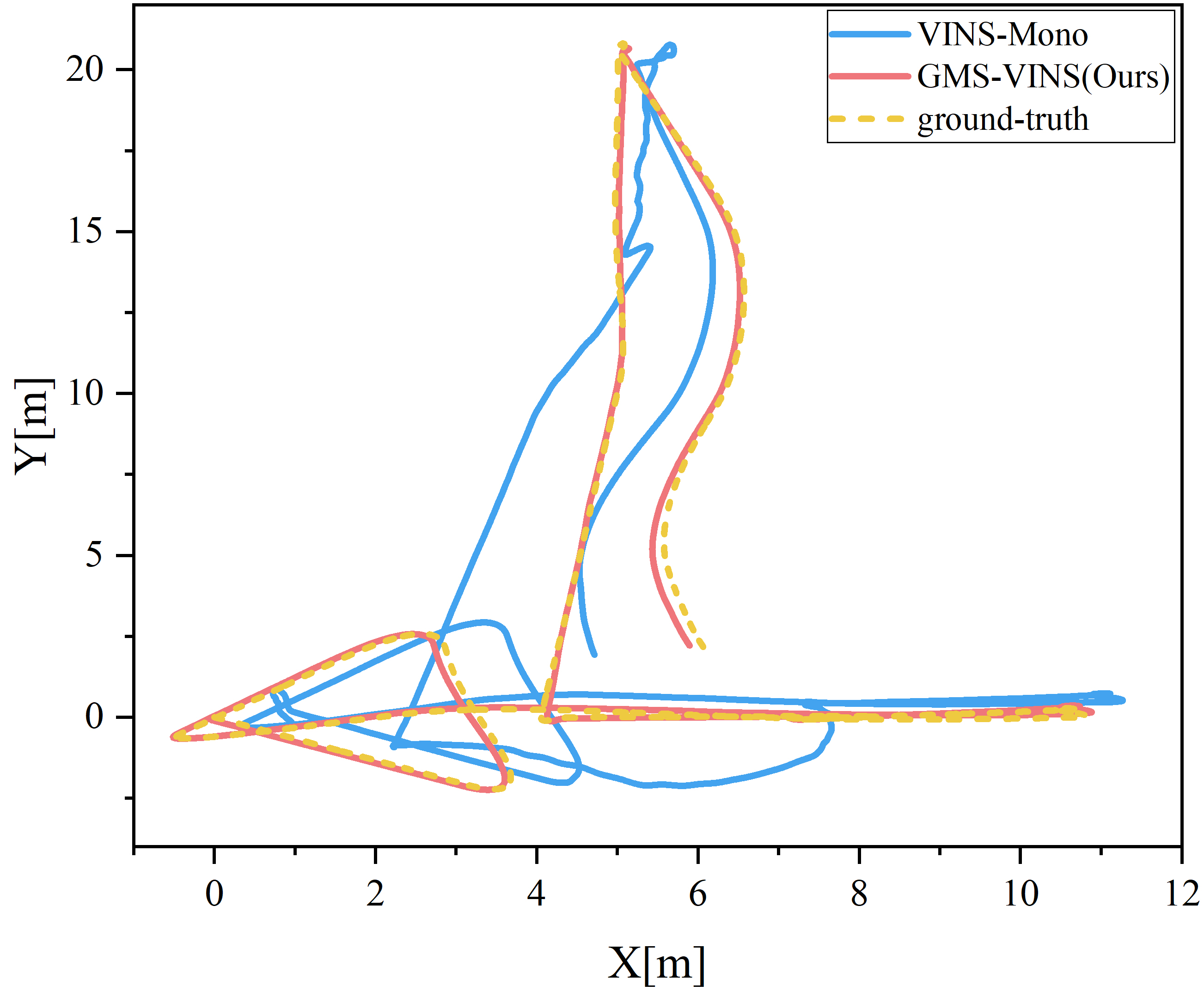

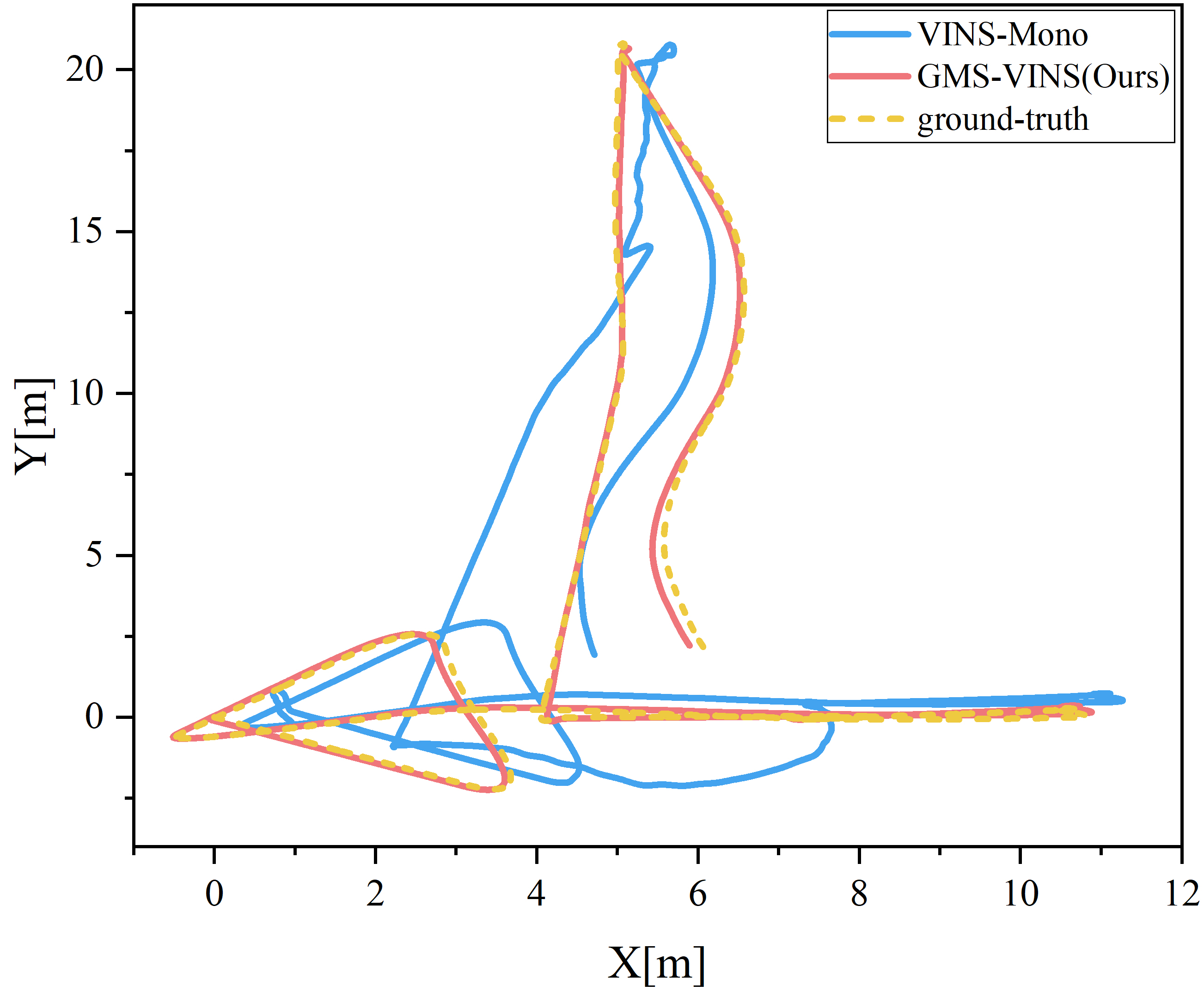

VIODE Dataset parking lot environment

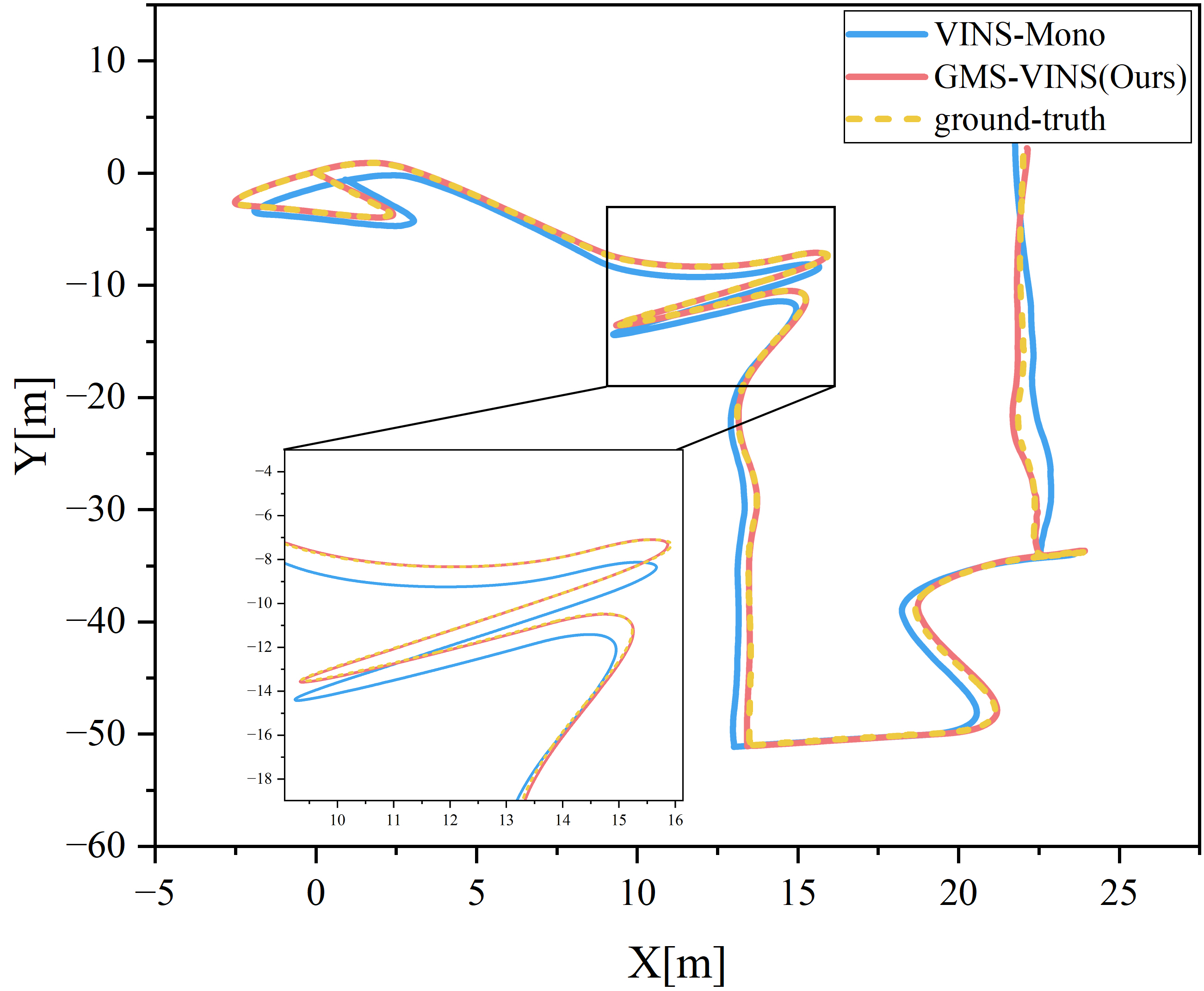

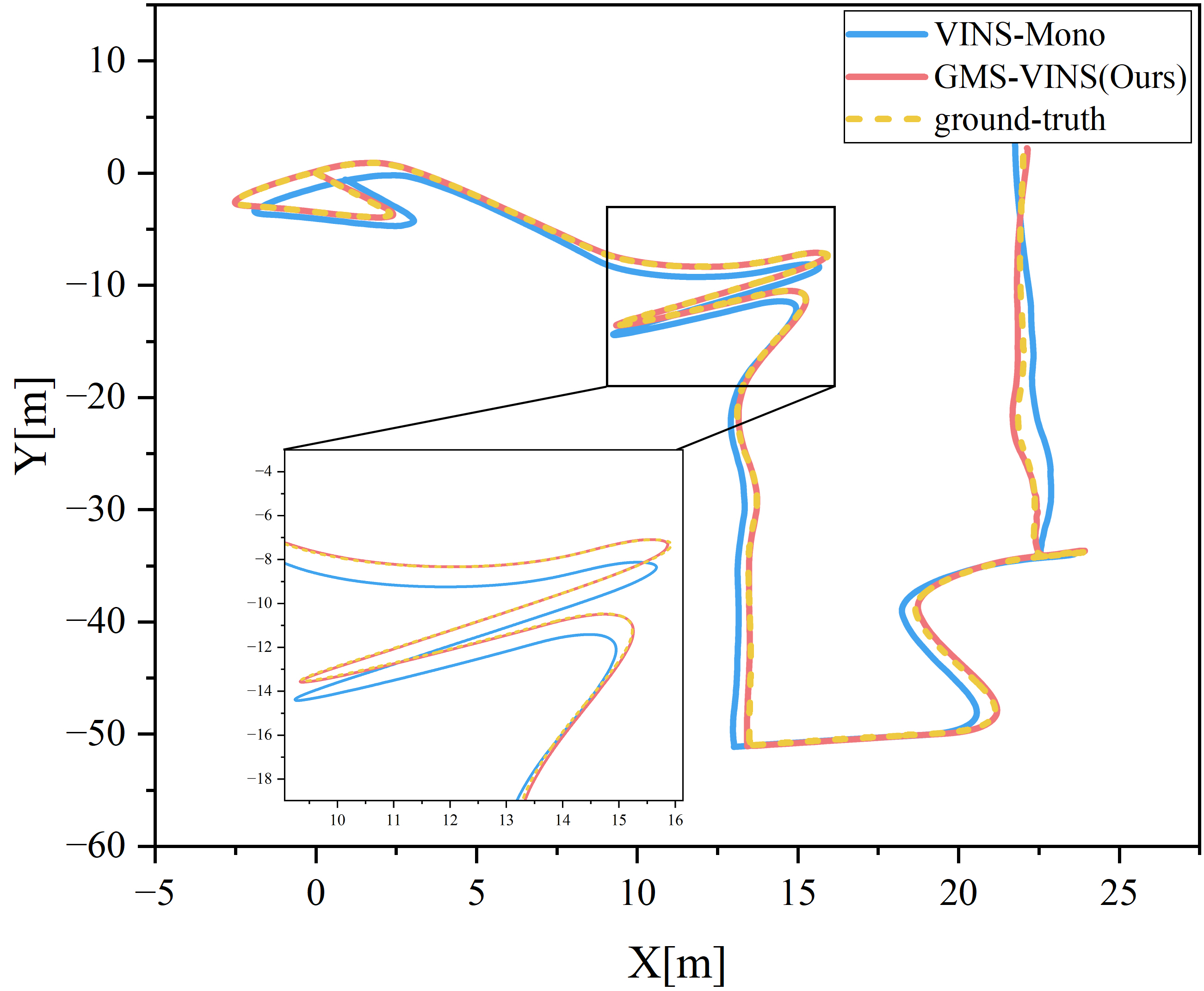

VIODE Dataset city day environment

Visual-inertial odometry (VIO) is widely used in robotics, drones, and autonomous vehicles. However, real-world scenes often contain dynamic objects, which degrade VIO accuracy. The diversity and partial occlusion of these objects pose a significant challenge for existing dynamic VIO methods. To address it, we introduce ADUGS-VINS, which integrates an enhanced SORT algorithm and a promptable foundation model into VIO, improving pose estimation accuracy in environments with diverse dynamic objects and frequent occlusions. We evaluated the method on multiple public datasets covering a variety of scenes, as well as in a real-world scenario with diverse dynamic objects. The experimental results show that it outperforms other state-of-the-art methods across multiple scenarios, indicating strong generalization and adaptability in diverse dynamic environments and its potential to handle various dynamic objects in practical applications.
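To make the idea of tracking dynamic objects and excluding them from pose estimation more concrete, here is a minimal, hypothetical sketch. It is not the ADUGS-VINS implementation. It assumes that objects are given as axis-aligned bounding boxes, uses greedy IoU matching as a simplified stand-in for the Kalman prediction and Hungarian assignment of standard SORT, and rejects feature points by box membership rather than by the segmentation masks a promptable foundation model would provide. All names (`iou`, `associate`, `static_features`) and the `min_iou` threshold are illustrative assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]              # (x, y) feature location in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: List[Box], detections: List[Box],
              min_iou: float = 0.3) -> List[Tuple[int, int]]:
    """Greedy IoU matching (assumed simplification of SORT's assignment step).

    Returns (track_index, detection_index) pairs, best overlap first.
    """
    candidates = sorted(
        ((iou(t, d), ti, di)
         for ti, t in enumerate(tracks)
         for di, d in enumerate(detections)),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), []
    for score, ti, di in candidates:
        if score < min_iou:
            break  # remaining candidates overlap even less
        if ti in used_tracks or di in used_dets:
            continue
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    return matches


def static_features(points: List[Point], dynamic_boxes: List[Box]) -> List[Point]:
    """Keep only feature points that lie outside every dynamic-object box."""
    def inside(p: Point, b: Box) -> bool:
        return b[0] <= p[0] <= b[2] and b[1] <= p[1] <= b[3]

    return [p for p in points if not any(inside(p, b) for b in dynamic_boxes)]
```

In a pipeline of this kind, the matched tracks would carry object identities across frames, including through short occlusions, and only the points returned by `static_features` would be passed to the VIO back end.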

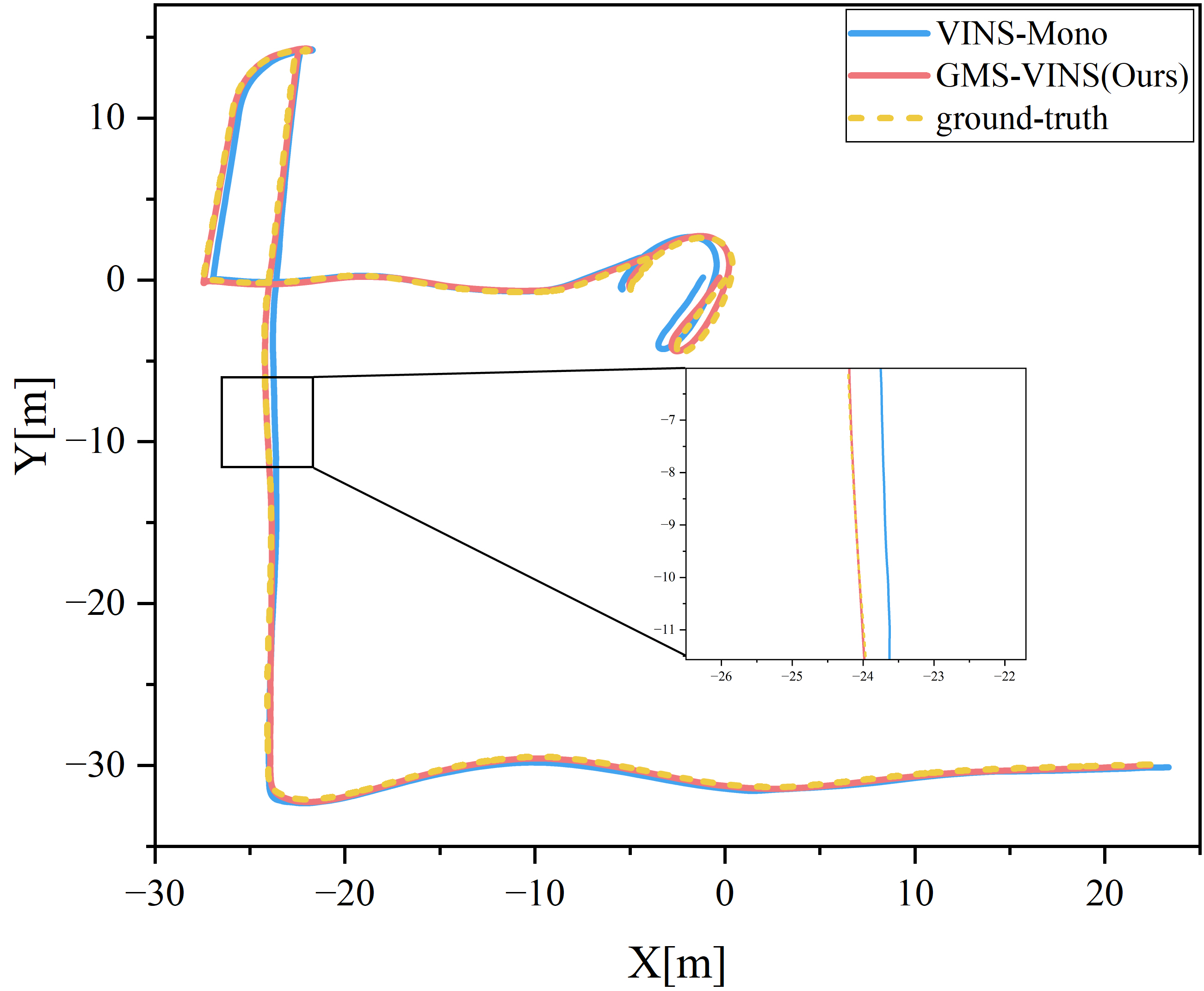

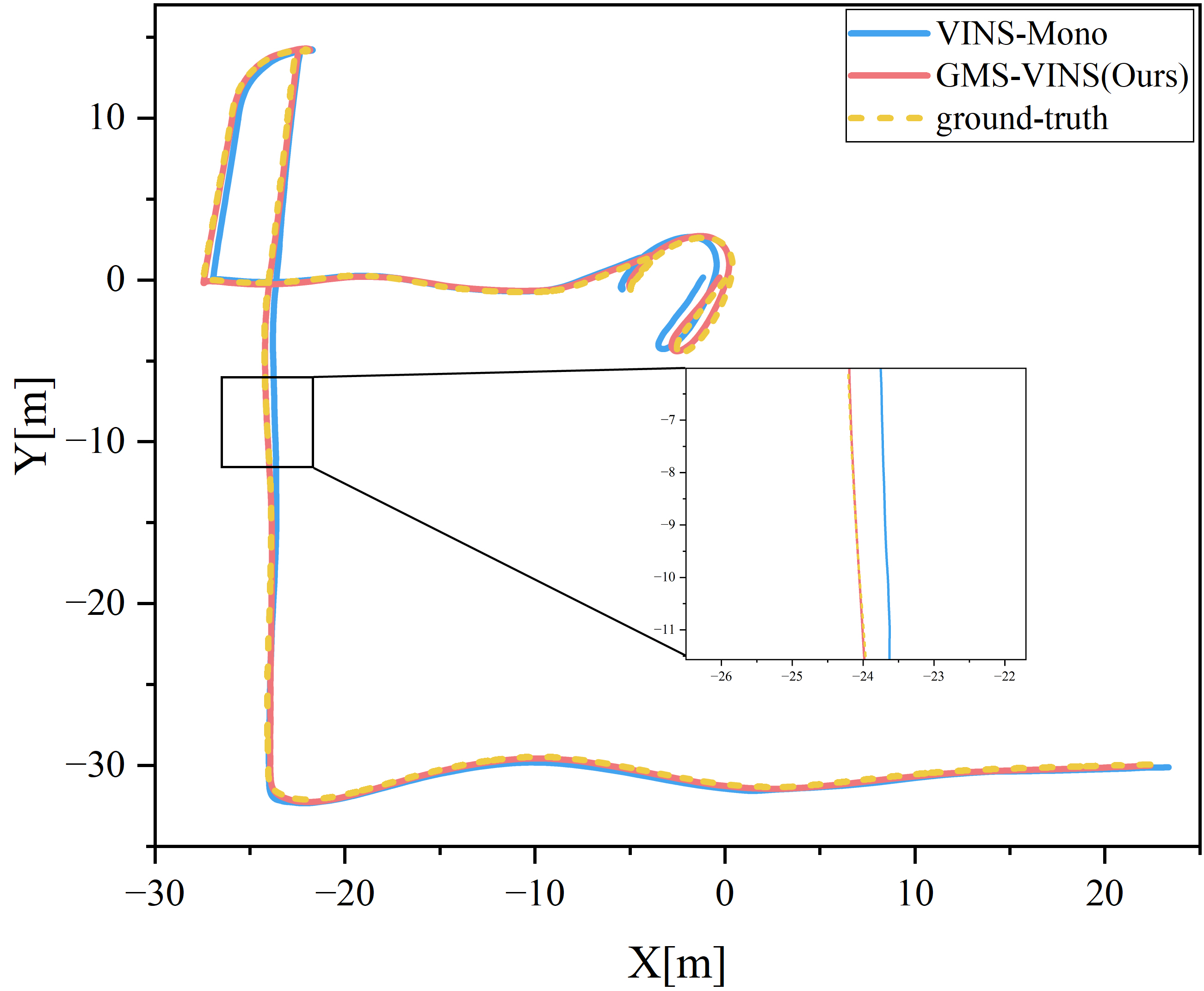

A RealSense D435i camera captures visual and inertial data for monocular visual-inertial SLAM. We collected an extensive outdoor dataset featuring a variety of moving objects, including pedestrians, cars, buses, motorcycles, and tricycles.

The dataset collected in real-world environments is publicly available on Hugging Face.
